OpenFlow is a communication protocol originally introduced by researchers at Stanford University in 2008. It allows the control plane to interact with the forwarding plane of a network device, such as a switch or router.

OpenFlow makes it possible to separate the forwarding plane from the control plane. This separation allows for more flexible and programmable network configurations, making it easier to manage and optimize network traffic. Think of a city's traffic control center and the traffic signals at each intersection. The control center (control plane) decides how traffic should flow, and the signals (forwarding plane) direct the cars (packets) accordingly. OpenFlow is the communication link that lets the control center reprogram the signals as conditions change.

How Does OpenFlow Relate to SDN?

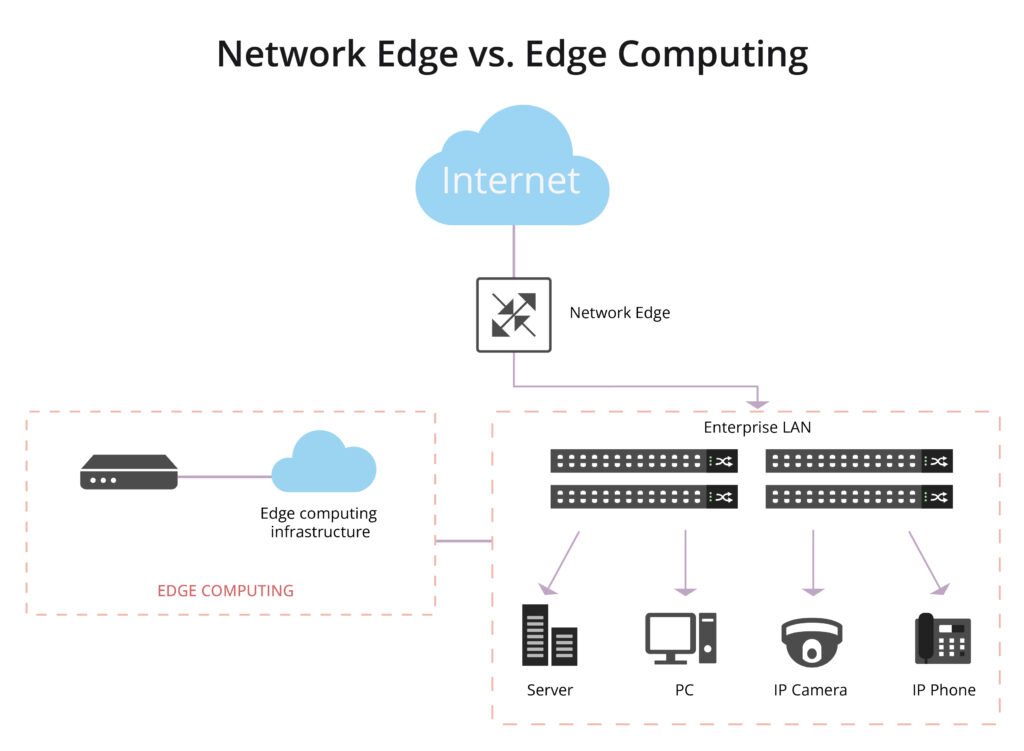

OpenFlow is often considered one of the key protocols within the broader SDN framework. Software-Defined Networking (SDN) is an architectural approach to networking that aims to make networks more flexible, programmable, and responsive to the dynamic needs of applications and services. In a traditional network, the control plane (deciding how data should be forwarded) and the data plane (actually forwarding the data) are tightly integrated into the network devices. SDN decouples these planes, and OpenFlow plays a crucial role in enabling this separation.

OpenFlow provides a standardized way for the SDN controller to communicate with the network devices. The controller uses OpenFlow to send instructions to the switches, specifying how they should forward or process packets. This separation allows for more dynamic and programmable network management, as administrators can control the network behavior centrally without having to configure each individual device.

How Does OpenFlow Work?

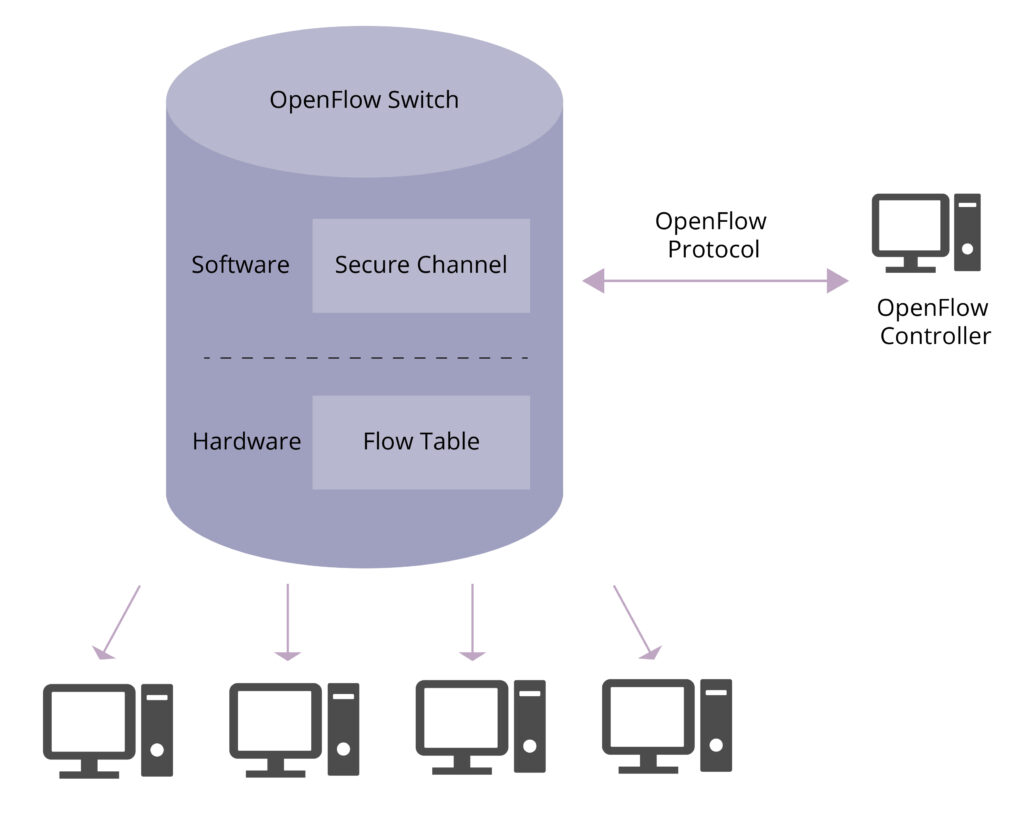

The OpenFlow architecture consists of controllers, network devices, and secure channels. Here’s a simplified overview of how OpenFlow operates:

Controller-Device Communication:

- An SDN controller communicates with network devices (usually switches) using the OpenFlow protocol.

- This communication runs over the OpenFlow channel (historically called the “secure channel”), which is usually a TCP connection and can be protected with TLS (Transport Layer Security) for added security.
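Every OpenFlow message on this channel begins with the same fixed 8-byte header: a version byte, a message-type byte, a 16-bit total length, and a 32-bit transaction ID (xid), all in network byte order. The minimal sketch below packs and unpacks that header with Python's `struct` module, assuming OpenFlow 1.3 (wire version `0x04`), where `OFPT_HELLO` is message type 0. It only builds bytes; opening the TCP or TLS connection itself (IANA port 6653) is left out, and the helper names are illustrative.

```python
import struct

# OpenFlow header: version (u8), type (u8), length (u16), xid (u32), big-endian.
OFP_HEADER = struct.Struct("!BBHI")

OFP_VERSION_1_3 = 0x04  # wire version number for OpenFlow 1.3
OFPT_HELLO = 0          # HELLO message type code in OpenFlow 1.3


def pack_header(msg_type: int, xid: int, body: bytes = b"") -> bytes:
    """Build a complete message: the 8-byte header followed by an optional body."""
    length = OFP_HEADER.size + len(body)  # the length field covers header + body
    return OFP_HEADER.pack(OFP_VERSION_1_3, msg_type, length, xid) + body


def unpack_header(data: bytes) -> tuple[int, int, int, int]:
    """Return (version, type, length, xid) parsed from the first 8 bytes."""
    return OFP_HEADER.unpack_from(data)


hello = pack_header(OFPT_HELLO, xid=1)
print(hello.hex())           # 0400000800000001
print(unpack_header(hello))  # (4, 0, 8, 1)
```

Both sides send a HELLO like this when the connection opens, which is how they agree on a protocol version.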

Flow Table Entries:

- An OpenFlow switch maintains one or more flow tables that describe how to handle different types of network traffic. Each flow entry combines match fields with the actions to apply to matching packets, along with a priority and counters that track how many packets and bytes the entry has matched.
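A flow entry can be modeled as a small record. The sketch below is a hypothetical in-memory model, not a real switch API: `FlowEntry`, its attribute names, and action strings such as `"output:2"` are illustrative assumptions, and the match keys are borrowed from OpenFlow 1.3 field names. Besides match fields and actions, it carries a priority and packet/byte counters.

```python
from dataclasses import dataclass


@dataclass
class FlowEntry:
    """Hypothetical model of one flow table entry (not a real library type)."""
    match: dict[str, object]   # header field name -> required value
    actions: list[str]         # e.g. "output:2"; the string format is illustrative
    priority: int = 0          # the higher value wins when several entries match
    packet_count: int = 0      # counters updated each time the entry is hit
    byte_count: int = 0

    def record_hit(self, packet_len: int) -> None:
        self.packet_count += 1
        self.byte_count += packet_len


# Send HTTP traffic destined for 10.0.0.2 out of port 2.
web = FlowEntry(
    match={"ipv4_dst": "10.0.0.2", "ip_proto": 6, "tcp_dst": 80},
    actions=["output:2"],
    priority=100,
)
flow_table = [web]
web.record_hit(1500)
print(web.packet_count, web.byte_count)  # 1 1500
```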

Packet Matching:

- When a packet enters the OpenFlow switch, the switch examines the packet header and matches it against the entries in its flow table.

- The match fields in a flow table entry specify the criteria for matching a packet (e.g., source and destination IP addresses, protocol type).
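Matching itself reduces to comparing header fields. In this minimal sketch, a match is a dictionary of required values, and any field it omits acts as a wildcard, mirroring how OpenFlow treats unspecified match fields. The dictionary representation and field names are assumptions for illustration; real switches also support masked matches (for example IP prefixes), which this sketch leaves out.

```python
def matches(match: dict[str, object], headers: dict[str, object]) -> bool:
    """True if every field named in `match` has the same value in `headers`.

    Fields not listed in `match` are wildcards and are ignored.
    """
    return all(headers.get(name) == value for name, value in match.items())


packet = {"ipv4_src": "10.0.0.1", "ipv4_dst": "10.0.0.2", "ip_proto": 6, "tcp_dst": 80}

print(matches({"ipv4_dst": "10.0.0.2"}, packet))                  # True
print(matches({"ipv4_dst": "10.0.0.2", "ip_proto": 17}, packet))  # False: entry wants UDP
print(matches({}, packet))                                        # True: all wildcards
```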

Flow Table Lookup:

- The switch performs a lookup in its flow table to find the matching entry for the incoming packet. If several entries match, the switch uses the one with the highest priority.
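Priority-based lookup can be sketched as a scan that keeps the best match, with `None` standing for a table miss. This assumes a hypothetical table of `(priority, match, actions)` tuples with dictionary matches where omitted fields are wildcards; real switches use dedicated lookup hardware rather than a linear scan.

```python
from typing import Optional

# Hypothetical flow table representation: (priority, match fields, actions).
FlowTable = list[tuple[int, dict[str, object], list[str]]]


def lookup(table: FlowTable, headers: dict[str, object]) -> Optional[list[str]]:
    """Return the actions of the highest-priority matching entry, or None on a miss."""
    best = None
    for priority, match, actions in table:
        # An entry matches when every field it lists agrees with the packet.
        if all(headers.get(k) == v for k, v in match.items()):
            if best is None or priority > best[0]:
                best = (priority, actions)
    return best[1] if best is not None else None


table: FlowTable = [
    (10, {"ipv4_dst": "10.0.0.2"}, ["output:1"]),
    (100, {"ipv4_dst": "10.0.0.2", "tcp_dst": 80}, ["output:2"]),
]

print(lookup(table, {"ipv4_dst": "10.0.0.2", "tcp_dst": 80}))  # ['output:2']
print(lookup(table, {"ipv4_dst": "10.0.0.2", "tcp_dst": 22}))  # ['output:1']
print(lookup(table, {"ipv4_dst": "10.0.0.9"}))                 # None (table miss)
```

Web traffic hits both entries, and the more specific, higher-priority rule wins; other traffic to the same host falls through to the broader rule.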

Actions:

- Once a match is found, the corresponding actions in the flow table entry are executed. Actions can include forwarding the packet to a specific port, modifying the packet header, or sending it to the controller for further processing.
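The three kinds of action listed above can be sketched as a small interpreter. The string encodings (`"output:<port>"`, `"set_field:<name>=<value>"`, `"controller"`) are hypothetical; real OpenFlow encodes actions as binary structures and implements "send to controller" as output to the reserved CONTROLLER port. As in OpenFlow, a packet whose actions include no output is effectively dropped.

```python
def apply_actions(actions: list[str], headers: dict[str, object]):
    """Apply actions in order; return (final headers, output ports, sent_to_controller)."""
    headers = dict(headers)        # work on a copy so the caller's packet is untouched
    out_ports: list[int] = []
    to_controller = False
    for action in actions:
        kind, _, arg = action.partition(":")
        if kind == "output":
            out_ports.append(int(arg))        # forward out of a physical port
        elif kind == "set_field":
            name, _, value = arg.partition("=")
            headers[name] = value             # rewrite a header field
        elif kind == "controller":
            to_controller = True              # hand the packet to the controller
        else:
            raise ValueError(f"unknown action: {action}")
    # No output port and no controller action means the packet is dropped.
    return headers, out_ports, to_controller


pkt = {"ipv4_dst": "10.0.0.2", "vlan_vid": "10"}
print(apply_actions(["set_field:vlan_vid=20", "output:3"], pkt))
# ({'ipv4_dst': '10.0.0.2', 'vlan_vid': '20'}, [3], False)
print(apply_actions([], pkt))
# ({'ipv4_dst': '10.0.0.2', 'vlan_vid': '10'}, [], False)
```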

Controller Decision:

- If the packet doesn’t match any existing entry in the flow table (a “table miss”), the switch either drops the packet or sends it to the controller in a Packet-In message, depending on the switch's table-miss configuration.

- The controller, based on its global view of the network and application requirements, can then decide how to handle the packet and send instructions back to the switch.
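A classic example of this reactive loop is a MAC learning switch. On each table miss the controller records which port the source MAC address arrived on. If it already knows the destination's port, it installs a flow entry so later packets are forwarded without involving the controller; otherwise it floods. This is a plain-Python simulation of that logic under stated assumptions: the class name, method name, and returned values are hypothetical and do not correspond to any specific controller framework.

```python
class LearningController:
    """Hypothetical reactive controller that learns MAC-to-port mappings."""

    def __init__(self) -> None:
        self.mac_to_port: dict[str, int] = {}
        # (match, action) pairs the controller has pushed to the switch.
        self.installed: list[tuple[dict[str, str], str]] = []

    def packet_in(self, in_port: int, eth_src: str, eth_dst: str) -> str:
        """Handle a table miss and return the action for this one packet."""
        self.mac_to_port[eth_src] = in_port        # learn where the sender lives
        out_port = self.mac_to_port.get(eth_dst)
        if out_port is None:
            return "flood"                         # destination unknown: flood
        action = f"output:{out_port}"
        # Install a flow so future packets to eth_dst skip the controller.
        self.installed.append(({"eth_dst": eth_dst}, action))
        return action


ctl = LearningController()
print(ctl.packet_in(1, "aa:aa", "bb:bb"))  # flood (bb:bb not learned yet)
print(ctl.packet_in(2, "bb:bb", "aa:aa"))  # output:1
print(ctl.installed)                       # [({'eth_dst': 'aa:aa'}, 'output:1')]
```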

Dynamic Configuration:

- Administrators can dynamically configure the flow table entries on OpenFlow switches through the SDN controller. This allows for on-the-fly adjustments to network behavior without manual reconfiguration of individual devices.
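In OpenFlow, these changes are carried by Flow-Mod messages, whose command field selects operations such as add, modify, or delete. The sketch below simulates a controller pushing one policy change to every switch it manages. The `Switch` class and `push_flow_mod` helper are hypothetical, each table is a plain dictionary rather than the binary Flow-Mod encoding, and entries are identified by their exact priority and match, similar to OpenFlow's strict matching mode.

```python
class Switch:
    """Hypothetical switch whose flow table maps (priority, match) -> actions."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.flows: dict[tuple[int, frozenset], list[str]] = {}


def push_flow_mod(switches, command, priority, match, actions=None):
    """Apply one Flow-Mod-style command to every managed switch."""
    key = (priority, frozenset(match.items()))    # hashable identity of the entry
    for sw in switches:
        if command == "add":
            sw.flows[key] = list(actions or [])   # install, replacing an identical entry
        elif command == "modify":
            if key in sw.flows:                   # modify only updates existing entries
                sw.flows[key] = list(actions or [])
        elif command == "delete":
            sw.flows.pop(key, None)               # remove the exact entry if present
        else:
            raise ValueError(f"unsupported command: {command}")


fabric = [Switch("s1"), Switch("s2"), Switch("s3")]
# Block telnet (TCP port 23) network-wide: an empty action list drops matching packets.
push_flow_mod(fabric, "add", 200, {"ip_proto": 6, "tcp_dst": 23})
print([len(sw.flows) for sw in fabric])  # [1, 1, 1]
push_flow_mod(fabric, "delete", 200, {"ip_proto": 6, "tcp_dst": 23})
print([len(sw.flows) for sw in fabric])  # [0, 0, 0]
```

One call changes behavior on all three switches, which is the point of central configuration.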

What are the Application Scenarios of OpenFlow?

OpenFlow has found applications in various scenarios. Some common application scenarios include:

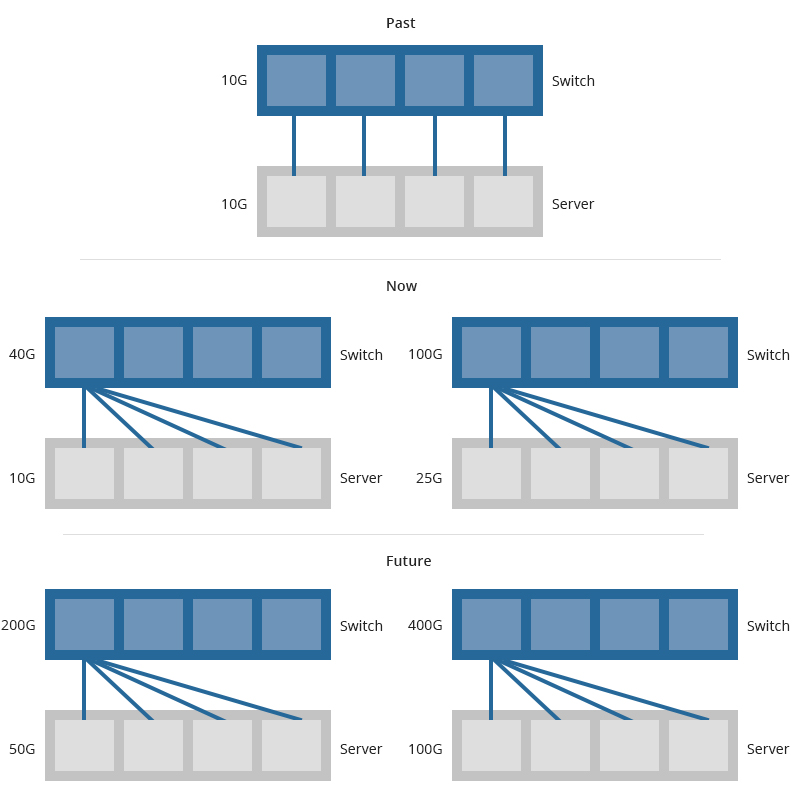

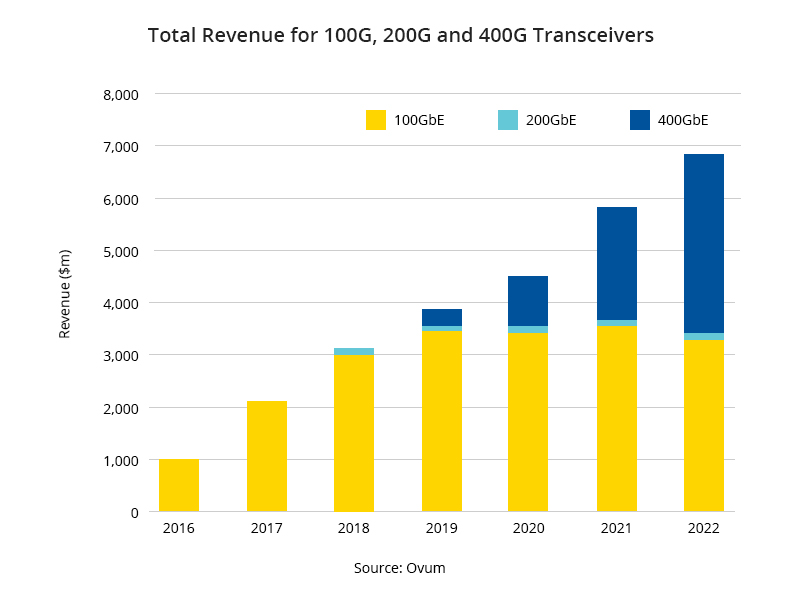

Data Center Networking

Cloud data centers often host multiple virtual networks, each with distinct requirements. OpenFlow supports network virtualization by allowing the creation and management of virtual networks on shared physical infrastructure. In addition, OpenFlow facilitates dynamic load balancing across network paths in data centers. The SDN controller, equipped with a holistic view of the network, can distribute traffic intelligently, preventing congestion on specific links and improving overall network efficiency.
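One simple way a controller can use its global view for load balancing is to place each new flow on the candidate path whose busiest link is least loaded, then add the flow's demand to every link on that path. This greedy heuristic is an illustrative choice, not the method any particular controller uses, and the topology, link names, and demand figures are made up for the example.

```python
def pick_path(paths, link_load):
    """Return the path whose most heavily loaded link carries the least traffic."""
    return min(paths, key=lambda path: max(link_load[link] for link in path))


def place_flow(paths, link_load, demand):
    """Choose a path for a new flow and record its demand on every link it uses."""
    path = pick_path(paths, link_load)
    for link in path:
        link_load[link] += demand
    return path


# Two equal-cost paths between a pair of leaf switches via different spines.
paths = [("leaf1-spine1", "spine1-leaf2"), ("leaf1-spine2", "spine2-leaf2")]
load = {"leaf1-spine1": 0, "spine1-leaf2": 0, "leaf1-spine2": 0, "spine2-leaf2": 0}  # Mb/s

for demand in (400, 300, 200):
    print(place_flow(paths, load, demand))
# ('leaf1-spine1', 'spine1-leaf2')
# ('leaf1-spine2', 'spine2-leaf2')
# ('leaf1-spine2', 'spine2-leaf2')
```

After placing each flow, the controller would install matching flow entries on the switches along the chosen path.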

Traffic Engineering

Traffic engineering involves steering traffic across a network so that capacity is used efficiently and service stays resilient to failures and congestion. OpenFlow allows for the dynamic rerouting of traffic in the event of link failures or congestion. The SDN controller can quickly adapt and redirect traffic along alternative paths, minimizing disruptions and ensuring continued service availability.
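The rerouting step can be illustrated with a fewest-hop path computation that skips links reported as down. This is a sketch, assuming the controller already knows the topology as a list of switch-to-switch links (for example from port-status messages or topology discovery); after computing the new path, a real controller would install flow entries along it. Breadth-first search is used here for simplicity; production traffic engineering usually also weighs link capacity and load.

```python
from collections import deque


def shortest_path(links, src, dst, failed=frozenset()):
    """Breadth-first search for a fewest-hop path that avoids failed links."""
    graph = {}
    for a, b in links:
        if (a, b) in failed or (b, a) in failed:
            continue                                  # skip links reported down
        graph.setdefault(a, []).append(b)
        graph.setdefault(b, []).append(a)
    previous = {src: None}
    queue = deque([src])
    while queue:
        node = queue.popleft()
        if node == dst:
            path = []
            while node is not None:                   # walk back to the source
                path.append(node)
                node = previous[node]
            return path[::-1]
        for nxt in graph.get(node, []):
            if nxt not in previous:
                previous[nxt] = node
                queue.append(nxt)
    return None                                       # no surviving path


links = [("s1", "s2"), ("s2", "s4"), ("s1", "s3"), ("s3", "s4")]
print(shortest_path(links, "s1", "s4"))                         # ['s1', 's2', 's4']
print(shortest_path(links, "s1", "s4", failed={("s2", "s4")}))  # ['s1', 's3', 's4']
```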

Networking Research Laboratory

OpenFlow-based testbeds provide a platform for emulating complex network scenarios. Researchers can recreate diverse network environments, including large-scale topologies and various traffic patterns, to study the behavior of their proposed solutions. Its programmable and centralized approach makes it an ideal platform for researchers to explore and test new protocols, algorithms, and network architectures.

In conclusion, OpenFlow has emerged as a linchpin of modern networking, enabling the dynamic, programmable, and centralized control that is the hallmark of SDN. Its diverse applications make it an important technology for organizations seeking agile, responsive networks in the face of evolving demands. As the networking landscape continues to evolve, OpenFlow remains a clear example of how separating control from forwarding can reshape the way networks are built and managed.