In an era where enterprise networks must support an increasing array of connected devices, agility and scalability in networking have become business imperatives. The shift towards open networking has catalyzed the rise of bare metal switches within corporate data networks, reflecting a broader move toward flexibility and customization. As these switches gain momentum in enterprise IT environments, one may wonder, what differentiates bare metal switches from their predecessors, and what advantages do they offer to meet the demands of modern enterprise networks?

What is a Bare Metal Switch?

Bare metal switches originated from a growing need to separate hardware from software in the networking world. The concept was propelled mainly by the same trend in personal computing, where users are free to choose the operating system they install. Before their advent, proprietary solutions dominated: a single vendor would provide the networking hardware bundled with its own software.

A bare metal switch is a network switch without a pre-installed operating system (OS) or, in some cases, with a minimal OS that serves simply to help users install their system of choice. Such switches are the foundational components of a customizable networking solution. Made by original design manufacturers (ODMs), they are called “bare” because they come as blank devices on which the end-user installs their chosen networking software. As a result, they offer considerably more flexibility than traditional proprietary network switches.

Bare metal switches usually adhere to open standards and use common hardware components available from many vendors. The hardware typically consists of a high-performance switching silicon chip, a set of ports, and the standard processing components required to perform networking tasks. Unlike their proprietary counterparts, however, these switches do not lock you into a specific vendor’s ecosystem.

What are the Primary Characteristics of Bare Metal Switches?

The aspects that distinguish bare metal switches from traditional closed switches include:

Hardware Without a Locked-down OS: Unlike traditional networking switches from vendors like Cisco or Juniper, which come with a proprietary operating system and a closed set of software features, bare metal switches are sold with no such restrictions.

Compatibility with Multiple NOS Options: Customers can install a network operating system (NOS) of their choice on a bare metal switch. This could be a commercial NOS, such as Cumulus Linux or Pica8's PicOS, or an open-source NOS like Open Network Linux (ONL).

Standardized Components: Bare metal switches typically use standardized hardware components, such as merchant silicon from vendors like Broadcom, Intel, or Mellanox, which allows them to achieve cost efficiencies and interoperability with various software platforms.

Increased Flexibility and Customization: By decoupling the hardware from the software, users can customize their network to their specific needs, optimize performance, and scale more easily than with traditional, proprietary switches.

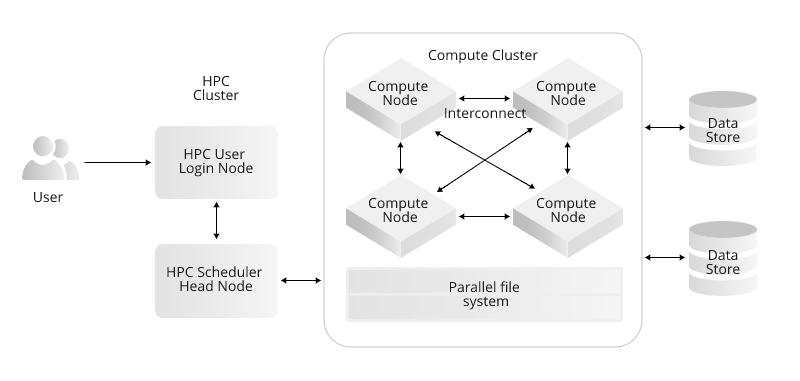

Target Market: These switches are popular in large data centers, cloud computing environments, and with those who embrace the Software-Defined Networking (SDN) approach, which requires more control over the network’s behavior.

Bare metal switches and the ecosystem of NOS options enable organizations to adopt a more flexible, disaggregated approach to network hardware and software procurement, allowing them to tailor their networking stack to their specific requirements.

Benefits of Bare Metal Switches in Practice

Bare metal switches introduce several advantages for enterprise environments, particularly within campus networks and remote office locations at the access edge. They offer an economical way to manage the surging traffic driven by the growth of Internet of Things (IoT) devices and by employees bringing personal devices onto the network. These devices, along with extensive cloud service usage, generate considerable network load through activities like streaming video, so enterprises need a more efficient and cost-effective way to accommodate this growing data flow.

In contrast to the traditional approach where enterprises might face high costs updating edge switches to handle increased traffic, bare metal switches present an affordable alternative. These devices circumvent the substantial markups imposed by well-known vendors, making network expansion or upgrades more financially manageable. As a result, companies can leverage open network switches to develop networks that are not only less expensive but better aligned with current and projected traffic demands.

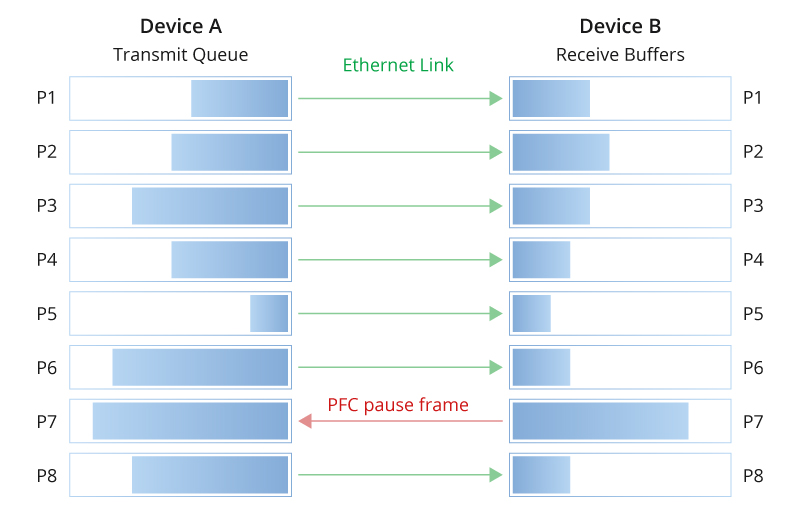

Furthermore, bare metal switches support the leaf-spine network topology, which is more efficient than the traditional three-tier structure. Leaf-spine consolidates the access and aggregation layers and often enables a single-hop connection between devices, which improves connection efficiency and performance. Vendors such as Pica8 pair this architecture with Multi-Chassis Link Aggregation (MLAG) in place of the older Spanning Tree Protocol (STP). Because MLAG keeps redundant links forwarding simultaneously instead of blocking them, it effectively doubles available bandwidth and provides rapid network convergence when a link fails.
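The bandwidth difference between STP and MLAG can be illustrated with a small calculation. The sketch below is a deliberately simplified model, not a description of any vendor's implementation: it assumes a dual-homed access switch with identical uplinks, that STP leaves only one of the redundant uplinks forwarding, and that MLAG spreads traffic evenly across all of them. The function name, the mode strings, and the 10 Gbps link speed are illustrative assumptions.

```python
def usable_uplink_gbps(link_gbps: float, links: int, mode: str) -> float:
    """Return the uplink capacity that can forward traffic at the same time.

    Simplified model (assumption): identical links, one spanning-tree
    instance, and perfectly even load sharing across an MLAG bundle.
    """
    if links < 1:
        raise ValueError("at least one uplink is required")
    if mode == "stp":
        # STP blocks redundant uplinks to prevent loops, so only one forwards.
        return link_gbps
    if mode == "mlag":
        # MLAG presents the uplinks as one logical link; all of them forward.
        return link_gbps * links
    raise ValueError(f"unknown mode: {mode!r}")


if __name__ == "__main__":
    # Hypothetical access switch with two 10 Gbps uplinks.
    for mode in ("stp", "mlag"):
        print(mode, usable_uplink_gbps(10.0, 2, mode))
```

With two uplinks the MLAG figure is twice the STP figure, which is where the "doubling" comes from. Real networks will deviate from this model: per-VLAN spanning-tree variants can use more than one link, and hashing in a link aggregation group is rarely perfectly even.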

Building High-Performing Enterprise Networks

The FS S5870 series of switches is tailored for enterprise networks, with models primarily equipped with 48 1G RJ45 ports and a variety of uplink ports. This configuration addresses the challenge of connecting many devices within an enterprise. S5870 PoE+ switches add PoE+ support, which reduces installation and deployment costs and makes network deployment more flexible across a range of scenarios. The PicOS License and PicOS maintenance and support services further simplify operation for enterprises. Features such as ACL, RADIUS, TACACS+, and DHCP snooping enhance network visibility and security. The FS professional technical team assists with installation, configuration, operation, troubleshooting, software updates, and a wide range of other network technology services.
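When sizing an access switch with 48 1G ports, one common check is the oversubscription ratio: total access-port bandwidth divided by total uplink bandwidth. The sketch below shows the arithmetic only. The uplink count and speed are placeholders chosen for illustration, not S5870 specifications, and the function name is hypothetical.

```python
def oversubscription_ratio(access_ports: int, access_gbps: float,
                           uplink_ports: int, uplink_gbps: float) -> float:
    """Ratio of total access bandwidth to total uplink bandwidth."""
    upstream = uplink_ports * uplink_gbps
    if upstream <= 0:
        raise ValueError("uplink bandwidth must be positive")
    downstream = access_ports * access_gbps
    return downstream / upstream


if __name__ == "__main__":
    # 48 x 1G access ports (from the text); 4 x 10G uplinks is an assumption.
    ratio = oversubscription_ratio(48, 1.0, 4, 10.0)
    print(f"oversubscription {ratio:.1f}:1")
```

A ratio near 1:1 means the uplinks can carry almost everything the access ports can offer at once. Higher ratios are common at the access edge, where not all ports are busy simultaneously.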